Most courses that teach Natural Language Processing (NLP) go into a lot of theory before getting into the coding aspects of the concept. I don’t disparage instructors for doing this, as NLP is a complicated topic.

However, I couldn’t help but wonder if I could come up with a quick tutorial that can help people learn the core concepts without going deep into explanations of NLP-specific concepts. That is the purpose of this tutorial.

Caveat: You won’t become an NLP expert from this tutorial, and you should in no way use the solution from this tutorial for NLP applications. The tutorial will help guide you to the workflow that is often involved with NLP projects. Also, the examples are contrived and meant to work out perfectly. NLP libraries are much more sophisticated and can handle exceptional cases. That is their purpose. When you go through this tutorial, though, you’ll see that the steps involved when using NLP libraries won’t be drastically different!

Goals of the Tutorial

Our task for this tutorial is to determine the sentiment of a short paragraph or text block. The goal is to count the positive words and the negative words in the text by comparing each word against predefined lists. Then, we’ll plug these counts into a formula that yields the sentiment of the text block.

We’ll use a list that contains positive words and another list that contains negative words. These lists are not extensive in their use of positive or negative words. They are constructed specifically for this tutorial.

The words in the main text block are checked first in the positive words list. Each word that is found is added into another list called positive. The same technique is used for the negative words.

We’ll also use a list of stop words to strip common words out of the text, but this step is included only to show that other steps can be part of NLP processing.

For our scenario, the stop words won’t have any impact on our analysis, i.e., how our sentiment indicator is calculated.

For other scenarios, it would be useful and appropriate to remove stop words. For instance, if you are looking to see frequency of important words, stop words could cloud the analysis. Therefore, removing them makes sense.

Processing the stop words won’t affect the result in our sentiment scenario, but going through the exercise illustrates how it’s done for future projects where it may matter!

The list of positive words is used to group words from the body of text that would be deemed positive. In NLP terms, this grouping could be loosely (and I mean, very loosely) described as a Bag of Words (BOW).

I won’t go into detail about BOW here as there is much more to the Bag of Words model than this oversimplification. But it’s good to start getting used to the terminology that is used in NLP.

NLP is often implemented using a series of steps. You don’t need to use every step for each implementation, but it’s available if you need it. The decision about which steps to use will depend on the project.

Newer libraries for NLP are adopting a pipeline paradigm, which is, by definition, a series of steps. Whichever way you decide to think of it, you’ll apply a series of tasks based on the output you’re looking to achieve.

Why I Chose Not to Use NLP Packages for This Tutorial

It may seem a bit out of place to create a tutorial about NLP without using any NLP packages, but this was the intent. As mentioned earlier, the NLP libraries would require more explanation. It would defeat the purpose of a quick tutorial.

The package I chose for this tutorial is the string package. If you are an experienced Python programmer, you have likely used this package before. If not, it is intuitive enough to see without much explanation.

Assumptions

This tutorial assumes you are familiar with programming in Python. You don’t need years of experience under your belt, but you should understand the following concepts:

- Importing packages (only need the string package for this tutorial)

- Working with the string package. As mentioned, though, this is intuitive.

- List comprehensions

- Basic Python data types, including lists and strings

- String functions, such as join() and lower()

- Tutorial was developed using Jupyter Notebook on Anaconda.

I have included resources at the end of this tutorial that describe and demonstrate the topics above.

The code for this example is available on GitHub.

Initialization

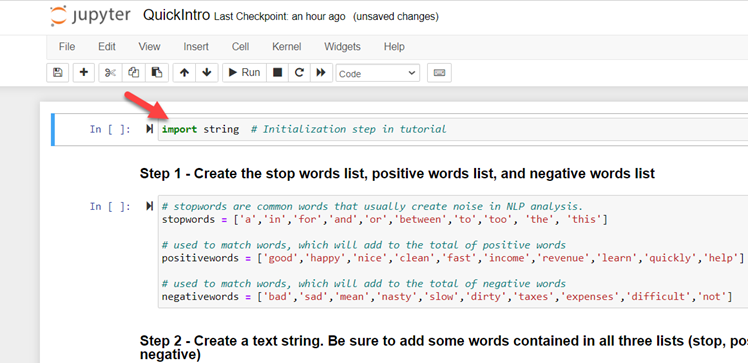

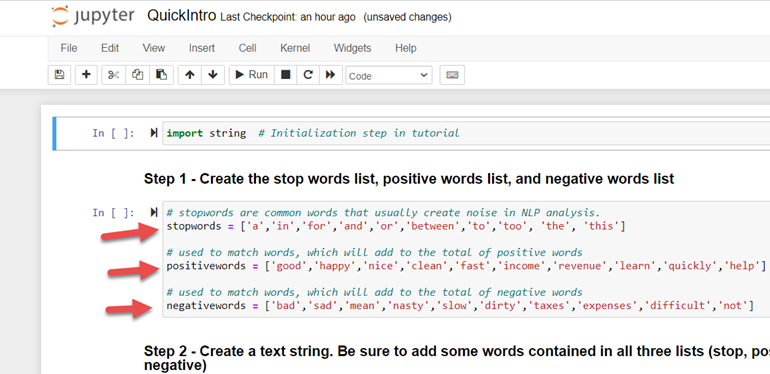

Start by importing the string package. See code.

Steps (each described in further detail in the Analysis section below):

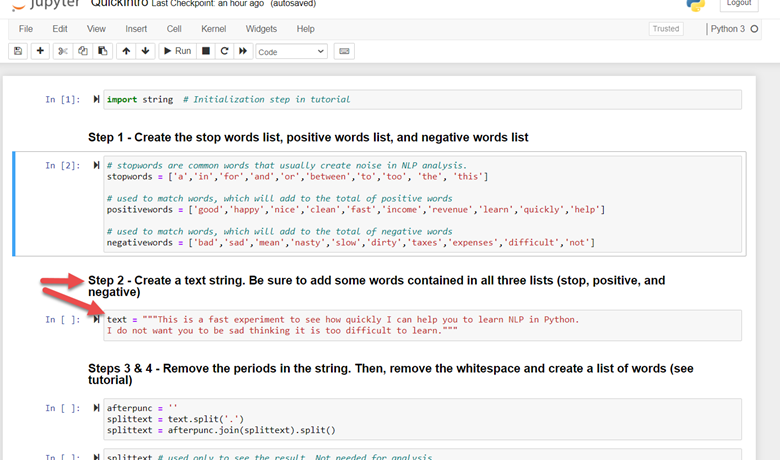

- Create three lists for stop words, positive words, and negative words. Populate these lists with the words shown in the code.

- Create a text string (called text) that contains words in the stop words list, the positive words list, and the negative words list. It can be anything you want, just make sure it has some words contained in each list. See code for one example.

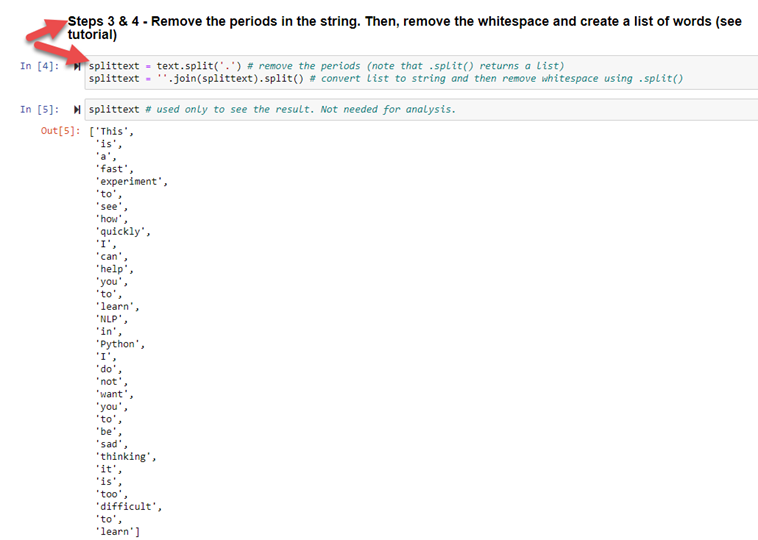

- Split the text, stripping out the periods of the sentences. NOTE: NLP has libraries that can be used to strip out an entire set of punctuation. For this tutorial, we’ll only deal with stripping out the periods.

- Since the split() function returns a list, use join() to make the results a string again. Then, split on whitespace characters (this is the default for the split() function).

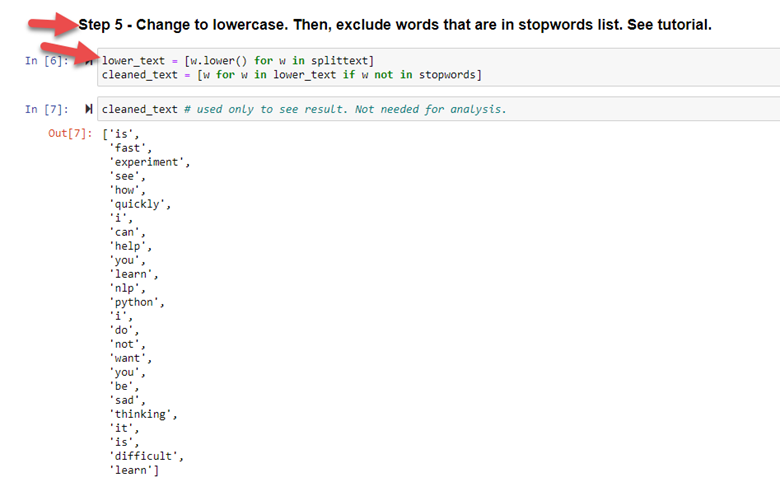

- Change all text to lowercase. Filter out (exclude) the words that are contained in the stop words list. NOTE: with a list comprehension, we can accomplish both of these tasks with two lines of code.

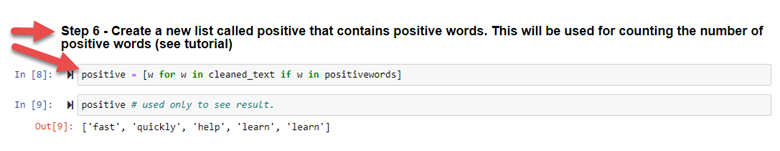

Consider learning comprehensions in Python if you aren’t already familiar with them. They are quite powerful and more efficient than using traditional loops. See resources section at the end of this tutorial.

- Create a list of words sourced from the cleaned_text that are contained in the positive words list. Lists allow us to include duplicates, which is our intended goal as we want a count of the number of positive words, even if they repeat.

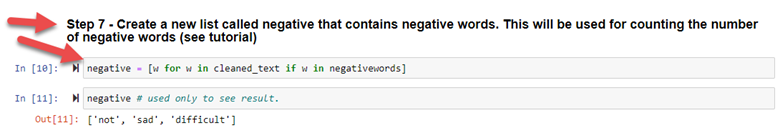

- Create a list of words sourced from cleaned_text that are contained in the negative words list. Like the positive words, these should include duplicates.

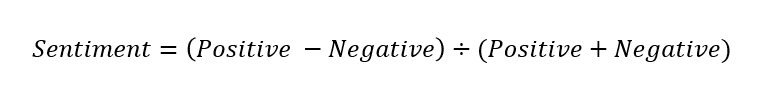

- Determine the sentiment of the list of words by the following formula:

Sentiment = (# of Positive Words - # of Negative Words) / (# of Positive Words + # of Negative Words)

If the sentiment is greater than 0, that indicates a net positive text. If the sentiment is less than 0, then the text is net negative.

You can also use the magnitude of the sentiment to indicate how strongly positive or negative the result is. For instance, a sentiment of 0.388 means the positive words outnumber the negative words by a margin of roughly 39% of all the matched words.

Analysis

Step 1 - Create three lists of words

The first step is to create three lists. The stop words list (named stopwords) is used to exclude any words in the body of the text that are contained in that list.

Stop words are words that are common and don’t add much to the meaning of the sentence or paragraph, or even document. For statistical analysis on NLP, they tend to muddy the waters of the data.

For instance, if you are looking for frequencies of important words, the results will be skewed by the large number of occurrences of words such as “a” or “the”.

As a reminder, processing stop words won't affect the analysis in this tutorial.
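To make the frequency point concrete, here is a minimal sketch using Python's standard collections.Counter. The sentence and the stop-word list are made up for illustration and are not the ones used in this tutorial.

```python
from collections import Counter

# Hypothetical sentence and stop-word list, for illustration only.
sentence = "the course is great and the examples are the best part of the course"
stopwords = ["the", "is", "and", "are", "of"]

tokens = sentence.split()

# Raw counts: the stop word "the" dominates the ranking.
raw_counts = Counter(tokens)
print(raw_counts.most_common(1))       # [('the', 4)]

# Counts after filtering stop words: a content word rises to the top.
filtered_counts = Counter(word for word in tokens if word not in stopwords)
print(filtered_counts.most_common(1))  # [('course', 2)]
```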

The positive words and negative words are similar in concept, and the explanation for one carries over to the other. The concept is simple: match each word in the body of the text (after cleaning) against the words in the positive words list. If the word is found, add it to the positive list. Do the same for the negative words and the negative list.

In this step (step 1), we create and initialize the three lists:
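As a rough sketch, the three lists might look like the following. The variable names come from this tutorial, but the words themselves are placeholders I chose for illustration; the lists in the original code may differ.

```python
# Hypothetical word lists for illustration; the tutorial's actual lists may differ.
stopwords = ["a", "an", "and", "are", "but", "i", "is", "it", "the", "this", "to", "was"]
positivewords = ["learn", "great", "helpful", "fun", "enjoy"]
negativewords = ["slow", "confusing", "boring", "hard"]

print(len(stopwords), len(positivewords), len(negativewords))
```

Keeping the lists lowercase matters here, because the text is converted to lowercase before it is compared against them.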

Step 2 – Create a text string for processing

Create a variable called text and initialize it with a few sentences. The content of the variable can be anything you like, but to get the full benefit of this tutorial, be sure to include a few words that are in the three lists (stopwords, positivewords, negativewords). As mentioned in step 1, using stopwords is not crucial. But the sentiment calculation depends on the text containing words from positivewords and negativewords; if it contains none, there is nothing to count. Of course, you are welcome to use the text as defined.

NOTE: if you aren’t familiar with the """ notation in the string, that is a multi-line string definition. When you start and end the string with """, it can span multiple lines.
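A minimal sketch of such a string follows. The sentences are invented for illustration (they are not the tutorial's original text), written so that they contain words one might place in each of the three lists.

```python
# Hypothetical text block; the triple quotes let the string span several lines.
text = """I want to learn this topic. The course is great and the examples are helpful.
But the videos are slow and the quiz was confusing and boring.
It is fun to learn."""

print(text)
```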

Steps 3 and 4 – Remove the periods in the sentences, then split on whitespace

The following bears some explanation.

The first split function removes the periods from the string. The split() function transforms the string into a list. That is the reason for the .join() method – to convert that list into a string.

We need a string because we want to apply the split function again, this time on whitespace. We cannot call split on a list, so we use the .join() method to convert it to a string first. Once it’s a string, we call split with no arguments, which splits on whitespace by default.

The result of the whitespace split is once again a list. This time, it is in the format we need for further processing, so we won’t need to join it back into a string. In NLP parlance, this is roughly equivalent to the concept of tokenization.
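The split, join, split sequence described above can be sketched as follows. The text is a made-up example, the names sentences, joined, and tokens are my own, and joining with a single space is an assumption on my part; the original code may join differently.

```python
# Hypothetical text; the tutorial's own string may differ.
text = """I want to learn this topic. The course is great.
It is fun to learn."""

# 1) Split on periods. The periods disappear and we get a list of fragments.
sentences = text.split(".")

# 2) Join the fragments back into one string. Joining with a space
#    (an assumption here) keeps words from fusing when no space follows a period.
joined = " ".join(sentences)

# 3) Split with no arguments, which splits on any run of whitespace.
tokens = joined.split()
print(tokens)
```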

NOTE: For actual NLP projects, you will probably want to remove all the punctuation. Most NLP libraries can handle this task rather easily. But regular expressions could also be used in the absence of these libraries.
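For the regular-expression route mentioned in the note, one possible sketch uses re.sub from the standard library to delete every character that is neither a word character nor whitespace. The sample sentence is made up, and this is only one of several reasonable patterns.

```python
import re

# Hypothetical sample containing several kinds of punctuation.
sample = "Wow, this is great! Is it fun? Yes."

# Remove anything that is not a word character (\w) or whitespace (\s).
no_punct = re.sub(r"[^\w\s]", "", sample)

tokens = no_punct.split()
print(tokens)  # ['Wow', 'this', 'is', 'great', 'Is', 'it', 'fun', 'Yes']
```

Note that this pattern also strips apostrophes, so a contraction such as "isn't" would become "isnt"; whether that is acceptable depends on the project.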

Step 5 - Preprocessing to convert to lowercase and remove stop words

In this step, we’ll convert each element of the list to lowercase letters. Then, we will remove the stop words.

Note that both of these operations use list comprehensions, which are a shorthand form of for loops. See the resources section below for more information on this and other Python constructs.
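Here is a small sketch of the two list comprehensions. The names stopwords and cleaned_text follow this tutorial; tokens, lowered, and the sample data are assumptions made for illustration.

```python
# Hypothetical inputs: a stop-word list and the tokens produced by the previous step.
stopwords = ["a", "an", "and", "are", "but", "i", "is", "it", "the", "this", "to", "was"]
tokens = ["I", "want", "to", "learn", "It", "is", "fun", "to", "learn"]

# First comprehension: lowercase every token so comparisons are case-insensitive.
lowered = [word.lower() for word in tokens]

# Second comprehension: keep only the words that are not stop words.
cleaned_text = [word for word in lowered if word not in stopwords]

print(cleaned_text)  # ['want', 'learn', 'fun', 'learn']
```

Lowercasing must happen before the stop-word filter; otherwise "I" and "It" would not match the lowercase entries in stopwords.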

Step 6 - Process Text for Positive Words

Now that we have a cleaned list, we can search this list to determine which elements are part of the positivewords list. We’ll add these to a new list called positive. A list gives us the ability to include duplicates.

If we didn’t account for duplicates, the word ‘learn’, which is in our positivewords list, would be counted only once even though it appears in our text twice. It should be counted twice to “strengthen” the positivity of the body of text. A list permits duplicates, which is exactly what we want.
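The matching step, and the effect of keeping duplicates, can be sketched like this. The data is hypothetical; only the names cleaned_text, positivewords, and positive come from this tutorial.

```python
# Hypothetical cleaned tokens and positive word list.
cleaned_text = ["want", "learn", "topic", "course", "great", "slow", "fun", "learn"]
positivewords = ["learn", "great", "helpful", "fun", "enjoy"]

# Keep every occurrence of a positive word, duplicates included.
positive = [word for word in cleaned_text if word in positivewords]

print(positive)            # ['learn', 'great', 'fun', 'learn']
print(len(positive))       # 4 -> 'learn' is counted twice
print(len(set(positive)))  # 3 -> a set would drop the repeat
```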

Step 7 - Process Text for Negative Words

The analysis of this step is exactly the same as the analysis for step 6. The difference is we use the negativewords list and create a new list called negative. This new list will contain the negative words found in our body of text (including duplicates), so its length gives us the count.
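The same comprehension, pointed at the negative list, might look like this. As before, the data is made up for illustration.

```python
# Hypothetical cleaned tokens and negative word list.
cleaned_text = ["videos", "slow", "quiz", "confusing", "boring", "lesson", "boring"]
negativewords = ["slow", "confusing", "boring", "hard"]

# Keep every occurrence of a negative word, duplicates included.
negative = [word for word in cleaned_text if word in negativewords]

print(negative)  # ['slow', 'confusing', 'boring', 'boring']
```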

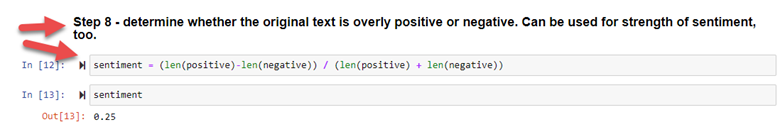

Step 8 - Calculate Sentiment

We now have all we need to satisfy the analysis of our scenario. As mentioned, we are looking to see whether the sentiment of the body of our text is positive or negative. Further, we can also determine how strong the sentiment is, whichever it happens to be.

Exercise: before seeing the actual results, try eyeballing the original text and the positivewords and negativewords. Then, guess whether the overall sentiment will be considered positive or negative.

We have the number of positive words and the number of negative words. We can determine the sentiment with the following formula:

Sentiment = (# of Positive Words - # of Negative Words) / (# of Positive Words + # of Negative Words)

The length of each list gives us the numbers we need to calculate the sentiment.

As you can see, the sentiment is greater than zero, which indicates that the overall sentiment is positive. The numerical result (0.25) also tells us how strong it is: the positive words outnumber the negative words by a margin equal to 25% of all matched words.
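The calculation can be sketched as follows. The two lists are hypothetical stand-ins for the results of steps 6 and 7, sized so that the score comes out to the 0.25 discussed above; the zero-division guard is my own addition and is not part of the formula as stated.

```python
# Hypothetical results of steps 6 and 7, chosen so the score matches 0.25.
positive = ["learn", "great", "helpful", "fun", "learn"]
negative = ["slow", "confusing", "boring"]

num_positive = len(positive)
num_negative = len(negative)
total = num_positive + num_negative

# Guard against division by zero when no words matched either list
# (this guard is an addition, not something the tutorial describes).
sentiment = (num_positive - num_negative) / total if total else 0.0

print(sentiment)  # 0.25
```

The score always falls between -1 (only negative words matched) and 1 (only positive words matched), which is what makes its magnitude usable as a rough strength indicator.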

Further Considerations

We accomplished a lot in just a few lines of code. But there are some factors to consider before finishing this tutorial.

- The three lists, stopwords, positivewords, and negativewords, were created solely for this tutorial. These lists would not be useful in a production setting. The creators of popular NLP libraries are experts in the field and have put a lot of thought and research into their word lists. Otherwise, practitioners wouldn’t even consider these libraries for their work.

- You’ll likely need to remove all punctuation rather than just the periods, as we did in this tutorial. See the resources for how this can be handled using NLTK’s RegexpTokenizer().

- NLTK is one of the earliest NLP packages. It was meant for academic study and research. However, many companies feel it is useful for production purposes. Newer to the scene is spaCy, which was developed for production use.

The takeaway is these packages are specifically designed for NLP and are well-supported. Unless your goal is to develop a competing NLP package, it is unlikely that coding NLP concepts using the concepts in this tutorial will provide value to your projects.

- I have seen the sentiment formula used by practitioners of NLP. However, that doesn’t mean this is the only method of measuring sentiment nor is it necessarily the best. It was chosen for its simplicity.

Resources

If you are unfamiliar with any concepts used in this tutorial, this section will point you to resources that may help. The list comprehensions may be tricky for newer Python coders, but it is well worth the effort to take a few minutes to understand this Python construct. It will serve you well in the future.

Python Lists – a decent tutorial on the basics of lists and the methods to work with them.

List Comprehensions – this resource not only describes how to work with list comprehensions but also describes the benefits of using them.

Multi-line Strings – a quick tutorial on how to implement multi-line strings in Python. You can also test out the concept in code with this resource.

String Methods – explains a few of the methods for string, including lower() which is used in this tutorial.

Convert a List to a String – article describes how to use the .join() method of string to create a string from a list.

Preprocessing Text for NLP – this article covers preprocessing of text using NLTK’s RegexpTokenizer(). This is a next-step tutorial to see how one package can be used to help with preparing the text. You’ll notice the steps are similar (in form) to what we did in this tutorial.

Google Research Colab Python Environment – the code in this tutorial was run on a local installation of Jupyter Notebooks (Anaconda). However, I also tested it on a web-based Google Colab installation with no issues. It worked fine. Google Colab requires a valid Google account.

Kaggle’s Introduction to NLP – when you’re ready to start using the NLP packages the way they were intended, this tutorial will give you a good introduction to the concepts and how the packages are used. This tutorial is free of charge at the time of this writing.